Google cares about how visitors interact with websites. Safety and security directly influence user experience (UX), so they matter to Google too.

Do you still believe that search engines care only about keyword strategy? Keywords are not the only factor that influences a site's SEO. You also need to consider user safety and security when assessing the search engine optimization needs of your website.

While most web users are vigilant and on the lookout for all kinds of tips and hacks to secure their web activity, security for websites, in general, is a whole different ball game. Most webmasters don't consider site security to be significant enough until they face the threat of a hack.

Internet safety is a big issue and people always look to browse safely without losing their money or reputation. If you are not cautious about the threats visitors may face while browsing your website, you might already be losing a significant volume of relevant traffic and even customers. When it comes to site security, Google's policy is quite straightforward – Google does not care about a site that does not care about its visitors' security!

How does not having an SSL certificate affect your SEO?

Better website security can bring you better traffic and enhance your sales. It is basic math. Ever since Google announced that it would flag HTTP sites as not secure, millions of websites have been migrating to HTTPS.

The equation between security and Google ranking has become straightforward. Back in 2014, impressed by the user security on HTTPS sites, Google decided to consider it as a ranking signal.

Getting an SSL (Secure Sockets Layer) certificate is an easy process. The certificate lets your server prove its identity and set up an encrypted connection. HTTPS sites add this SSL layer (in practice its successor, TLS) on top of HTTP, so the messages that travel between the visitor's browser and the server, such as form entries, logins, and payment details, cannot be read or altered in transit.

An SSL certificate gives buyers the confidence to pay for a product or service on your website with their credit or debit card. It strengthens the trust between your users and the website. That is just the kind of signal Google is seeking from websites today!
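An expired certificate undoes that trust, so it can help to check the expiry date automatically. The following is a minimal sketch using Python's standard `ssl` module. The function names are hypothetical, and `example.com` in the usage comment is a placeholder for your own domain.

```python
import socket
import ssl
import time
from typing import Optional


def fetch_not_after(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Return the certificate's 'notAfter' field, e.g. 'Jan  5 09:34:43 2030 GMT'."""
    context = ssl.create_default_context()  # verifies the chain and the hostname
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Convert a 'notAfter' string into the number of days left from `now`."""
    expires = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires - current) / 86400


# Usage (needs network access; the host is a placeholder):
#   remaining = days_until_expiry(fetch_not_after("example.com"))
#   print(f"Certificate expires in {remaining:.0f} days")
```

A negative result means the certificate has already expired. Because `create_default_context()` validates the certificate, an invalid one raises an error before the expiry date is ever read.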

How can bad blog comments affect your site performance?

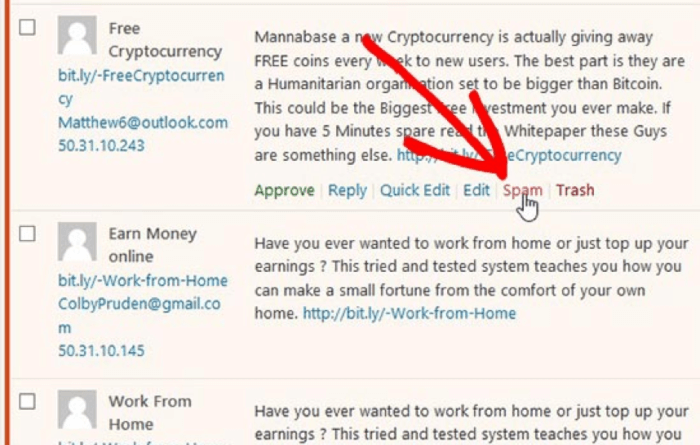

If you have owned or managed a blog, then you must have come across spam comments. Managing spam comments has become a ubiquitous aspect of running a blog.

Negative or spam comments on a blog don’t necessarily come from human users. Black Hat SEO techniques enable posting of random spam or negative comments via automated and semi-automated bots. They can visit thousands of websites per day and leave spammy links.

Google considers every link on your site, so scores of bot-posted links that real users never follow can hurt your ranking signals.

Spammy links that lead to potentially dangerous corners of the web can reduce the traffic Google sends your way.

Google considers the association of each brand and website before deciding how qualified they are to receive credible traffic. Therefore, discrediting a website is easy, as all it takes is posting a few automated spam comments in the blog section.

How to prevent this? Do the following:

- Make sure the ‘nofollow’ attribute is added to links in comments (WordPress does this automatically; check the behavior of any other CMS you may be using).

- Install plugins such as Akismet to prevent spam comments.

- Put in manual process checks to ensure user-generated content isn’t spamming your website.
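If your CMS does not add the attribute for you, the first item in the list above can be approximated in a few lines. This is a sketch under stated assumptions: comments arrive as plain text, the function name `render_comment` is hypothetical, and `ugc` is included alongside `nofollow` because Google also recognizes it for user-generated content.

```python
import html
import re

# Deliberately simple URL matcher; it stops at whitespace, quotes, and angle brackets.
URL_RE = re.compile(r"https?://[^\s<>\"']+")


def render_comment(text: str) -> str:
    """Escape a plain-text comment and turn URLs into nofollow links."""
    parts = []
    pos = 0
    for match in URL_RE.finditer(text):
        # Escape the text before the URL so pasted markup is shown, not executed.
        parts.append(html.escape(text[pos:match.start()]))
        url = html.escape(match.group(0), quote=True)
        parts.append(f'<a href="{url}" rel="nofollow ugc">{url}</a>')
        pos = match.end()
    parts.append(html.escape(text[pos:]))
    return "".join(parts)
```

Escaping everything outside the detected URLs means a commenter cannot smuggle in their own `<a>` tag without the attribute.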

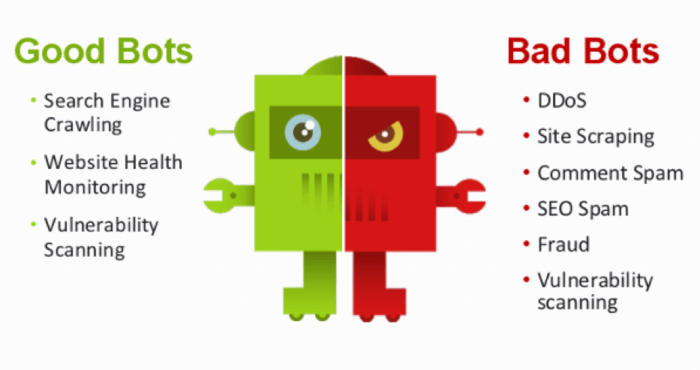

What are bad bots? How can they negatively influence your SEO?

Bad bots or malicious bots can steal unique content from your website. That can include pricing, customer information, and vendor information. Other forms of bad bots can also post spam comments as we have discussed in the previous section.

These bots can harm your site’s SEO in the following ways –

- Web scraping – Scraper bots can copy content with the intent of plagiarizing it. If the copy is mistaken for the original, Google can end up penalizing the original owner and pushing your site down the SERP.

- Price scraping – The price-scraper bots can steal real-time pricing data from business sites. They can decrease customer visits by offering duplicate content at another location.

- Form spamming – These bots spam a website by submitting fake forms repeatedly, which generates false leads.

- Interference with analytics – Skewed website analytics is a classic example of trouble caused by bad bots. By some estimates, bots generate around 40% of web traffic, and many analytics platforms are unable to distinguish between human users and bots.

- Automated Attacks – Automated attacks are the forte of bad bots that mimic human behavior. They can evade detection and pose security threats, including scraping credential info, exhausting inventory, and taking over accounts.

How to block malicious bots?

Do the following:

- Implement CAPTCHA to ensure bots aren’t able to submit fake requests.

- Hire a CSS expert to implement hidden fields in page content (human users don’t see these fields and hence don’t fill them in, but a bot will fill them with values and, hence, be found out).

- Do a weekly review of logs (an unaccountably large number of hits from a single IP generally indicates a bot attack).
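The hidden-field and log-review checks from the list above can be sketched as follows. The field name `website_url` and both function names are hypothetical, and the log parser assumes the client IP is the first whitespace-separated field on each line, as in the common access-log formats.

```python
from collections import Counter
from typing import Dict, Iterable, Mapping

HONEYPOT_FIELD = "website_url"  # hypothetical name of the CSS-hidden form field


def looks_like_bot(form: Mapping[str, str]) -> bool:
    """A human never sees the hidden field, so any value in it suggests a bot."""
    return bool(form.get(HONEYPOT_FIELD, "").strip())


def heavy_hitters(log_lines: Iterable[str], threshold: int) -> Dict[str, int]:
    """Count requests per client IP and return the IPs at or above the threshold."""
    hits = Counter(line.split()[0] for line in log_lines if line.strip())
    return {ip: count for ip, count in hits.items() if count >= threshold}
```

The right threshold depends on your normal traffic. A shared office or mobile carrier IP can legitimately produce many hits, so treat the output as a list to investigate rather than a block list.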

Malicious bots rarely stick to standard procedures during web crawling, and each bad bot can have a different modus operandi. Your actions should depend on the type of bad bot you are dealing with.

Locating and flagging down duplicate content

Manual action is most effective against scraper bots. Follow your trackbacks and backlinks to check if any site is publishing your content without permission.

If you chance upon unauthorized duplication of your content, immediately file a DMCA complaint with Google.

Running reverse DNS checks on iffy IP addresses

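Run a reverse DNS check on the suspected IP address. Good bots should turn up recognizable hostnames like *.search.msn.com for Bing and *.googlebot.com for Google, while an impostor will resolve to an unrelated hostname or to none at all. To be sure, run a forward DNS lookup on the returned hostname and confirm that it points back to the same IP address.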

You should block other bots that ignore robots.txt on your website. It is a telltale sign of malicious bot activity.
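The reverse DNS check can be automated with Python's standard `socket` module. The sketch below is illustrative only: the function names are hypothetical, the suffix list contains just the two hostnames mentioned above (check each search engine's documentation for its current list), and the forward lookup used here covers IPv4 only.

```python
import socket
from typing import Callable, List, Sequence

# Suffixes taken from the examples above; extend them from official crawler docs.
TRUSTED_SUFFIXES = (".googlebot.com", ".search.msn.com")


def reverse_lookup(ip: str) -> str:
    """Return the primary hostname registered for an IP address."""
    return socket.gethostbyaddr(ip)[0]


def forward_lookup(hostname: str) -> List[str]:
    """Return the IPv4 addresses a hostname resolves to."""
    return socket.gethostbyname_ex(hostname)[2]


def is_verified_crawler(
    ip: str,
    suffixes: Sequence[str] = TRUSTED_SUFFIXES,
    reverse: Callable[[str], str] = reverse_lookup,
    forward: Callable[[str], List[str]] = forward_lookup,
) -> bool:
    """Reverse-resolve the IP, check the hostname suffix, then confirm it forward."""
    try:
        hostname = reverse(ip).rstrip(".").lower()
        if not hostname.endswith(tuple(suffixes)):
            return False
        # The forward lookup must lead back to the same IP, otherwise the
        # reverse record could have been faked by whoever controls that IP.
        return ip in forward(hostname)
    except OSError:  # covers socket.herror and socket.gaierror
        return False
```

The lookup functions are passed in as parameters so the logic can be tested without network access. In production you would simply call `is_verified_crawler(ip)`.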

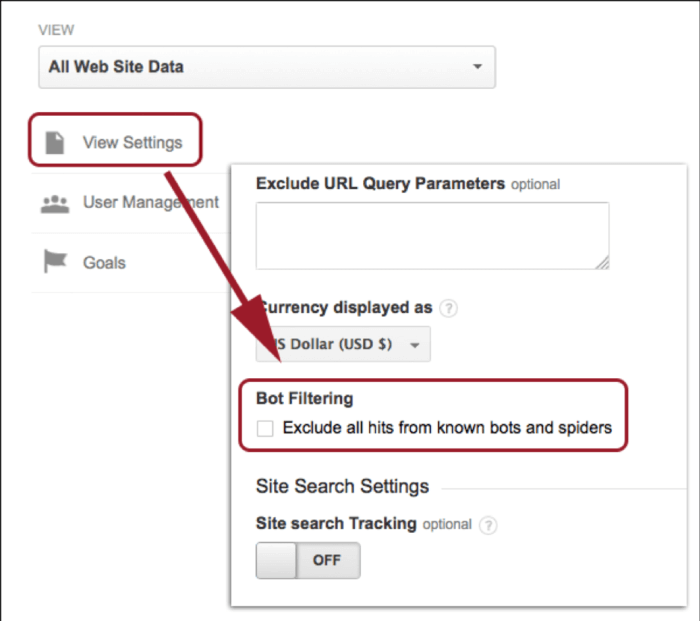

Set up bot filters via Google Analytics

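You can exclude hits from known bots from your reports by using Google Analytics. Note that this cleans up your data; it does not stop the bots from visiting your site.

Go to Admin > select All Website Data > go to Create New View > define your time zone > check Bot Filtering.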

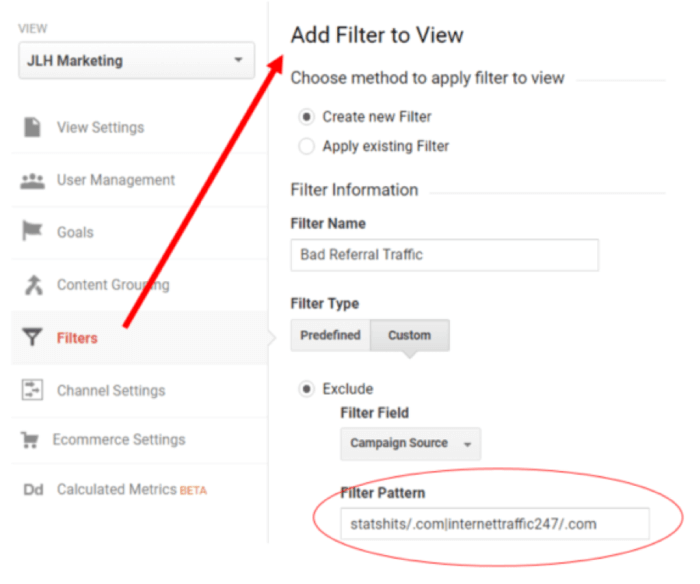

Adding a spam filter

You can also add a custom spam filter to keep malicious bot activity out of your reports.

Use a Google Analytics advanced filter to find sessions that exceed a threshold you set. Remember that the right threshold will vary between sites, depending on their daily traffic and type of visitors.

Setting up a referrer filter in Google Analytics

You can also block bandit bots by setting up a referrer filter. Here’s how:

Go to Admin > go to View > click on Filters > go to Add Filter to View > click on Custom and then on Exclude > go to the Filter Field > click on Campaign Source

Fill in the suspicious domains in the Campaign Source section.
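Filter patterns in Google Analytics are treated as regular expressions, so a list of suspicious domains has to be combined into a single pattern with the dots escaped. Here is a small sketch of one way to build it. The function name and the example domains are hypothetical, and it assumes the entries are plain domain names containing only letters, digits, hyphens, and dots.

```python
from typing import Iterable


def build_exclude_pattern(domains: Iterable[str]) -> str:
    """Join suspicious domains into one pattern for the filter field."""
    cleaned = sorted({d.strip().lower() for d in domains if d.strip()})
    # An unescaped dot matches any character, so escape it to match literally.
    return "|".join(d.replace(".", r"\.") for d in cleaned)


# Example with placeholder domains:
#   build_exclude_pattern(["spam-site.example", "bad.example"])
```

Keep an eye on the length of the result. If the pattern grows too long for a single filter field, split the domains across several filters.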

How can you keep your website safe for human users?

Apart from SSL and blocking bots, consider the security plugins you have in place right now. When was the last time your team updated them? Older versions are vulnerable to bots, malware attacks, and hacks. Monitor your website frequently and set up firewalls to block dubious users permanently from your site.

You also need to update your website theme. A study by WordPress team members shows that more than 80% of all hacks resulted from a lack of theme updates. Update your theme and plugins as soon as security patches are released. It might seem trite, but these updates are indispensable for maintaining website security in the long run.

Other than the steps we have mentioned above, you can be proactive and follow these preventive measures against hacks:

- Update your CMS software

- Use a strong password manager

- Set strong passwords and change them frequently

- Take regular backups of your site

- Get a malware scanner for your site

- Monitor your website backend access

- Scan for vulnerabilities and monitor them regularly

- Monitor all surges in traffic

- Invest in a content delivery network (CDN)

- Reroute traffic through an application firewall to block bots
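As one concrete example from the list above, a strong password can be generated with Python's standard `secrets` module, which is designed for security-sensitive randomness. This is a minimal sketch; the function name, the default length of 20, and the requirement of one character from each class are assumptions, not a universal policy.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a random password with a lowercase, uppercase, digit, and symbol."""
    if length < 4:
        raise ValueError("length must be at least 4 to fit every character class")
    while True:
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        # Retry until every character class is represented at least once.
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate
```

Store the result in your password manager rather than in a document or chat message.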

Like SEO, website security is an ongoing process. Each day hackers try to overcome the security firewalls adopted by websites, and each day software engineers come up with new ways to keep them out.

Final thoughts

Over 200 ranking signals affect website SEO and ranking. Security is definitely among them. Prioritizing on-site security is a win-win situation for website owners, visitors, and Google SEO. It is a smart investment that can take your website to the pinnacle of success in the long run.

Joydeep Bhattacharya is a digital marketing evangelist with over 12 years of experience. He maintains a personal SEO blog, SEOsandwitch.com, and regularly publishes his work on SEMrush, Ahrefs, Hubspot, Wired and other popular publications. You can connect with him on LinkedIn.