A customer satisfaction (CSAT) survey lets you measure customer experience, how likely customers are to recommend your business, and how satisfied they are with your brand.

A few simple rules, covering both the metrics you include and the question types you use, will help your customer satisfaction survey deliver high-quality insights, accurate data and actionable findings.

In our Quick Win on how to create an effective customer satisfaction survey, we go into more detail about metrics and question types, how to analyse and interpret results and even how to project manage the process.

In this blog post, we'll explore some effective question types that can help you pull together a useful CSAT survey.

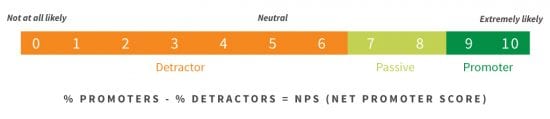

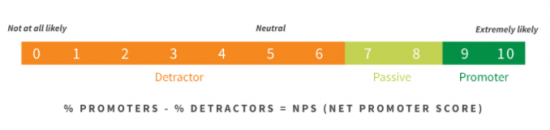

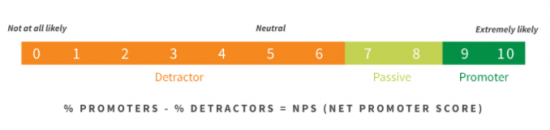

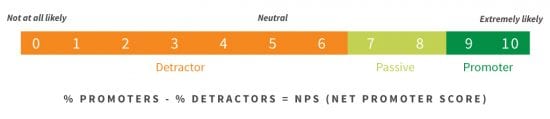

Net Promoter Score (NPS)

You might be familiar with NPS, as it has grown massively in popularity among marketers worldwide. It takes little effort for customers to answer and is generally a good indicator of how a business is doing. It's especially worth adding NPS to CSAT questionnaires, as it is an important measure of business health to monitor over time.

The typical NPS question asks how likely a customer is to recommend the business, on a scale from 0 (not at all likely to recommend) to 10 (extremely likely to recommend).

Respondents are then grouped as follows:

- Promoters (score 9-10): brand-loyal customers

- Passives (score 7-8): satisfied, but may switch to competitors

- Detractors (score 0-6): unhappy customers who may spread negative word-of-mouth

Source: https://www.netpromoter.com/know/

NPS question template:

[SCALE]

How likely are you to recommend this company to a friend, colleague or family member?

- 10 - Extremely likely

- 0 - Not at all likely
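The grouping above is what turns individual answers into a single score: NPS is usually reported as the percentage of promoters minus the percentage of detractors, giving a figure between -100 and +100. Here is a minimal Python sketch, assuming you have exported the raw 0-10 answers as a list of integers (the function name `nps` is just for illustration):

```python
from typing import Iterable


def nps(scores: Iterable[int]) -> float:
    """Return the Net Promoter Score (-100 to 100) for a set of 0-10 answers."""
    scores = list(scores)
    if not scores:
        raise ValueError("need at least one response")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("every score must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)   # scores 9-10
    detractors = sum(1 for s in scores if s <= 6)  # scores 0-6
    # Passives (7-8) count towards the total but not towards the difference
    return 100 * (promoters - detractors) / len(scores)


responses = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
print(nps(responses))
```

With 5 promoters, 2 passives and 3 detractors out of 10 responses, this gives 50% - 30% = 20. Note that passives still affect the score by diluting both percentages.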

Other metrics

Customer effort score (CES) is a useful way of understanding an experience, as it assesses how much effort a customer needed to complete a task.

Whilst NPS asks whether a customer would recommend the business, and other customer satisfaction questions ask specifically about satisfaction, CES asks customers to rate how easy their experience was.

A typical CES question could look like this:

Overall, how easy was it to [do the desired task]?

Answer options typically include:

- Very difficult

- Difficult

- Neither

- Easy

- Very easy

CES questions work best when shown to customers shortly after a specific touchpoint, such as a purchase or a contact with your support team.
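There isn't a single standard way to summarise CES answers. A common approach is to code the five options from 1 (very difficult) to 5 (very easy) and report the average, often alongside the share of people who found the task easy or very easy. The sketch below assumes that 1-5 coding and uses a hypothetical `ces_summary` function; adapt the mapping if your survey tool exports different labels.

```python
from statistics import mean

# Assumed coding for the five answer options (1 = most effort, 5 = least effort)
CES_CODES = {
    "very difficult": 1,
    "difficult": 2,
    "neither": 3,
    "easy": 4,
    "very easy": 5,
}


def ces_summary(answers: list[str]) -> dict[str, float]:
    """Return the mean CES (1-5) and the percentage answering Easy or Very easy."""
    if not answers:
        raise ValueError("need at least one response")
    # Normalise case so "Very easy" and "very easy" map to the same code
    codes = [CES_CODES[a.strip().lower()] for a in answers]
    easy_count = sum(1 for c in codes if c >= 4)
    return {"mean": mean(codes), "percent_easy": 100 * easy_count / len(codes)}


answers = ["Easy", "Very easy", "Neither", "Difficult", "Very easy"]
print(ces_summary(answers))
```

Reporting both figures is a design choice: the mean is sensitive to a few very difficult experiences, while the percentage is easier to explain to stakeholders.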

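The System Usability Scale (SUS), sometimes called a web usability score (WUS) when it is applied to websites, assesses usability. While it isn't specifically about satisfaction, it helps you understand your website's user experience.

Participants rate how strongly they agree with each of the following statements about a website or system, on a five-point scale from 1 (strongly disagree) to 5 (strongly agree):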

- I think that I would like to use this system frequently.

- I found the system unnecessarily complex.

- I thought the system was easy to use.

- I think that I would need the support of a technical person to be able to use this system.

- I found the various functions in this system were well integrated.

- I thought there was too much inconsistency in this system.

- I would imagine that most people would learn to use this system very quickly.

- I found the system very cumbersome to use.

- I felt very confident using the system.

- I needed to learn a lot of things before I could get going with this system.

Usability.gov has a great article on how to analyse and interpret the score.
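As a quick reference, the standard SUS scoring works like this: for the odd-numbered (positively worded) statements, subtract 1 from the answer; for the even-numbered (negatively worded) statements, subtract the answer from 5; then multiply the total by 2.5 to get a score from 0 to 100. A minimal Python sketch, assuming each participant's answers are stored as ten integers from 1 to 5 in the same order as the statements above (`sus_score` is a hypothetical name):

```python
def sus_score(responses: list[int]) -> float:
    """Score one participant's answers to the 10 SUS statements (each 1-5)."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for position, answer in enumerate(responses, start=1):
        if position % 2 == 1:
            total += answer - 1  # odd statements are positively worded
        else:
            total += 5 - answer  # even statements are negatively worded
    return total * 2.5  # rescale the 0-40 total to 0-100


participant = [4, 2, 4, 1, 5, 2, 4, 2, 5, 1]
print(sus_score(participant))
```

Each call scores a single participant; to report a score for your site, you would typically average `sus_score` across all participants.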

In the section below, I have grouped together survey questions you can ask to understand customer experience and satisfaction.

Understanding experiences and satisfaction

Questions you can ask could revolve around:

- In-store or online customer experience

- Checkout process feedback

- Satisfaction with customer support team

- Satisfaction with the product or service

Here is an example survey you can use:

Start with the NPS question and then move on to usage. For further understanding, you could also ask respondents why they gave the NPS score they did.

[SINGLE CHOICE]

Overall, how satisfied are you with [brand name/business name]?

- Very satisfied

- Slightly satisfied

- Neutral

- Not very satisfied

- Very dissatisfied

- I'm not sure

[SINGLE CHOICE]

How often do you use us when you need [insert]?

- Daily

- Weekly

- Monthly

- Annually

- Less frequently

- Never

[SINGLE CHOICE]

How reliable do you find [brand name/business name]?

- Very reliable

- Slightly reliable

- Neutral

- Not very reliable

- Not at all reliable

- I'm not sure

[SINGLE CHOICE]

How well does [brand name/business name] meet your needs?

- Very much meets my needs

- Slightly meets my needs

- Neither

- Doesn't meet my needs much

- Doesn't meet my needs at all

- I'm not sure

[OPEN END]

Please tell us why you gave that answer.

You could then follow up with questions asking them to rate or tell you about:

- Quality

- Value for money

- Delivery

- Customer service team

- Any other contact with the business

- How long they have been a customer

- How likely they would be to use you again

- Any other comments
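To report answers to the overall satisfaction question as a single CSAT figure, a common convention is the "top-two-box" percentage: the share of respondents who chose one of the two most positive options. The sketch below assumes that convention and also assumes "I'm not sure" answers are left out of the base; both are choices you may want to adjust, and `csat_percent` is a hypothetical name.

```python
# Assumption: the two most positive options count as "satisfied" (top-two-box)
SATISFIED = {"very satisfied", "slightly satisfied"}
# Assumption: "I'm not sure" answers are excluded from the calculation
EXCLUDED = {"i'm not sure"}


def csat_percent(answers: list[str]) -> float:
    """Return the percentage of scorable answers in the top two satisfaction options."""
    # Normalise case so labels like "Very Satisfied" and "Very satisfied" match
    normalised = [a.strip().lower() for a in answers]
    counted = [a for a in normalised if a not in EXCLUDED]
    if not counted:
        raise ValueError("no scorable answers")
    satisfied = sum(1 for a in counted if a in SATISFIED)
    return 100 * satisfied / len(counted)


answers = [
    "Very satisfied",
    "Neutral",
    "Slightly satisfied",
    "I'm not sure",
    "Very dissatisfied",
]
print(csat_percent(answers))
```

Here two of the four scorable answers are in the top two options, so the CSAT figure is 50%.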

Find out more about planning a survey, offering incentives and distributing it to get a good response rate in our Quick Win.

Conclusion

Good research comes from good questions and great survey design. Keep your survey short, avoid leading questions that probe for or influence a particular answer, and include a progress bar so participants can see how much of the survey remains. Grouping several questions on one page works, but spreading questions across several pages can be even more effective, as it reduces fatigue. You may also want to use survey routing, so that participants are only shown questions relevant to their earlier answers. Make sure questions are relevant to each person: for example, customers who haven't used your product much may find many detailed product questions irrelevant to them.

Survey tools I recommend for CSAT surveys include: