Heed this advice to avoid typical pitfalls

I think Danish physicist Niels Bohr said it best: “An expert is a person who has made all the mistakes that can be made in a very narrow field.” Having planned, analyzed and reported on over 700 user test sessions, and made lots of mistakes in the process, I certainly meet this definition.

But by reading and heeding my advice here, you won’t have to repeat the mistakes I made, and you’ll hop a few steps up the user test (UT) learning curve.

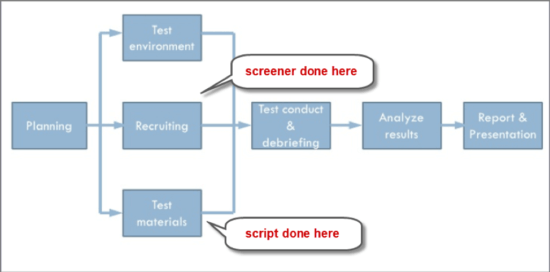

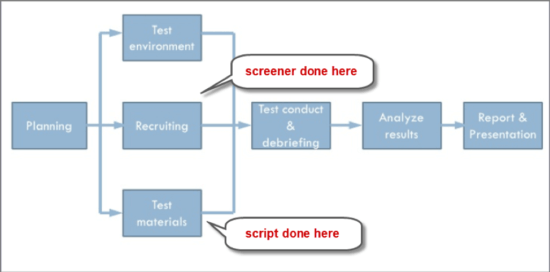

1. Write a rock-solid test script

Ever watched a movie that had lots of great actors in it but turned out to be terrible? (I certainly have!) Then you know the importance of a well-written and well-edited script. That’s especially true for user tests, and doubly true for unmoderated user tests, which make up over 80% of UTs done these days.

So, after first firming up your test objectives and scope, invest some time in writing a solid script, which includes:

- Test introduction

- 1st scenario

- Set of tasks

- 2nd scenario

- Set of tasks

- Etc.

- Wrap-up questions/questionnaire

For more complex research, like “path to purchase” journey studies, these scripts get much more involved. But these are the core parts.

When writing your script, make sure that:

- You’ve done enough to ease your testers’ anxiety (just the word ‘test’ can trigger some fear, so use the word ‘study’ instead).

- Your scenarios flow in a logical sequence and progress from lower to higher difficulty (so your testers don’t fail and bail out of the test early).

- You’ve provided all the information (e.g. form entries) needed to complete tasks.

- You have “tested your test script” (to make sure it flows smoothly and is error-free).

If you’re a “problem child” who needs to see consequences before taking action, know that a badly written test script can result in:

- Needing to rerun the test (or at least wasting a couple of participants).

- Having difficulty coding the script questions and logic on your testing platform.

- Not gathering all the usability feedback you need (relative to your test objectives).

- Wasting valuable time and budget.

You even risk lowering your professional credibility, which no one wants to do.

If you’re not a good writer, hire one!

One thing I’ve learned in life: it’s best to focus on what you’re best at and let others do the other things.

So, if you’re not a strong writer, admit that early on and hire someone who is. If you’re running the test yourself, network around to find a writer within your organization. Or hire a freelance copywriter with a strong reputation.

If you’re running your test on a platform (UserTesting, Userlytics, etc.), leverage the writers and analysts on its professional services team. By doing so, it’s 95% likely the first draft of your script will be good.

Lastly, make sure your script focuses on a specific point in your customer journey. A very common mistake I see: clients trying to assess too much in the same test. This makes the script, and the test, too long. You can always run another test next week or next month (and it’s usually better to use this test-iterate-retest approach anyway).

2. Don’t cut corners on your recruitment screener

I sometimes hear comments like, “I just tested it with my friends and family,” or “I tested it with my team members.” That’s fine if you’re testing a minimum viable product (MVP), or doing a formative study on a website or app with a smaller user base.

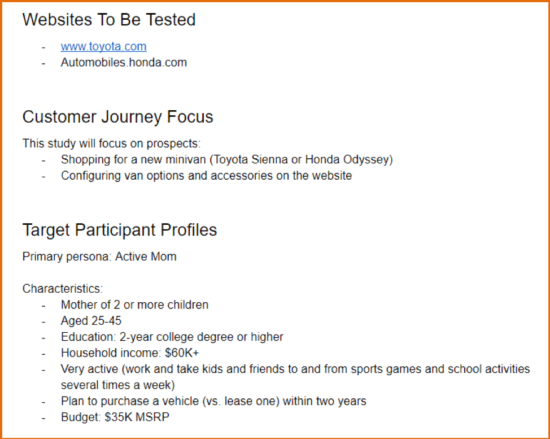

But if you’re testing a site or app that you will launch to a larger market, you need to bring in a representative set of participants — that is, participants who have the demographics, experience, mindset, and motivations of your target users. If you don’t, you may get a lot of feedback, but it won’t be valid.

Another common screening mistake I see: testing with participants who know too much. Remember, real-world users typically know nothing about the product or service you offer and have not previously experienced your website or app. So don’t test with subject matter experts or software developers; they know much more, and usually try harder, than non-technical people.

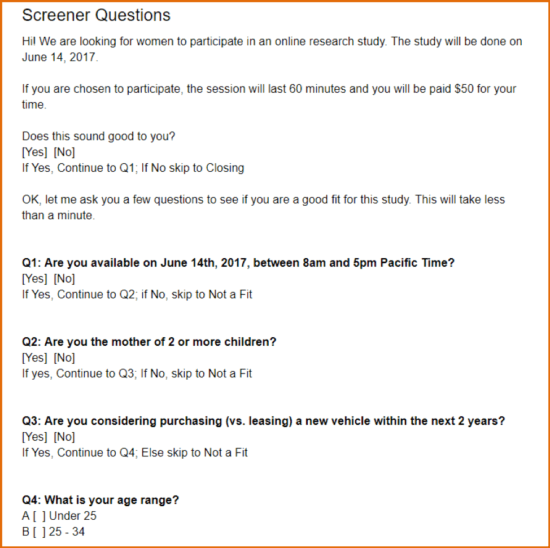

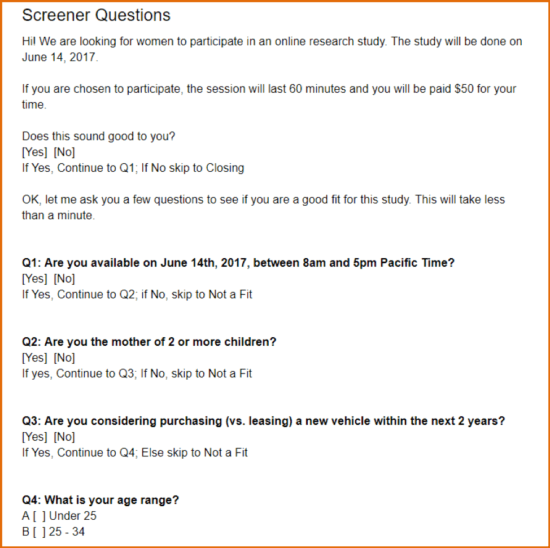

List the “must-have” characteristics your testers need, followed by the “nice-to-haves.” In your recruitment screener, eliminate prospective testers who don’t meet all the must-have criteria. Of course, if you’re testing with two or more user segments, you’ll have multiple sets of these criteria and may need to include question “branching” logic.

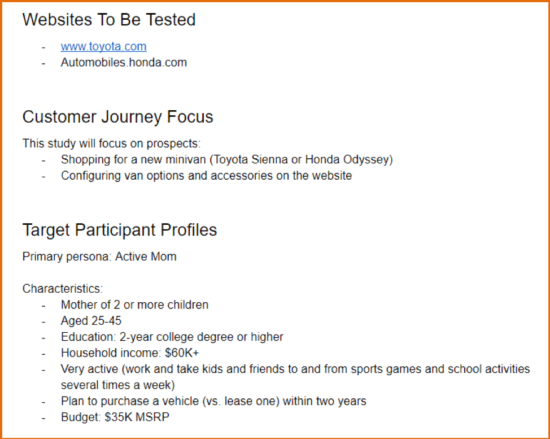

Below I show some sample screening questions, in this case for a “configure your new minivan” user test.

Except for some special cases (like when you need to recruit professionals or other people with specialized skills or experience), it doesn’t cost more to ask more screening questions. So build them in from the start to minimize the chances of recruiting “miscast” participants.
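If you script your screener or post-process screener exports yourself, the must-have logic above can be sketched in a few lines of Python. This is a minimal, hypothetical sketch, assuming answers arrive as a dictionary keyed by question id; the segment names, question ids (`owns_minivan`, `months_until_purchase`, `works_in_tech_or_auto`, `has_configured_car_online`) and thresholds are illustrative, not taken from any testing platform. A respondent qualifies for a segment only by passing every one of its must-haves; nice-to-haves are only counted to rank respondents who already qualify.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Screener answers keyed by a hypothetical question id.
Answers = Dict[str, object]
Criterion = Callable[[Answers], bool]


@dataclass
class Segment:
    """One user segment with its own must-have and nice-to-have criteria."""
    name: str
    must_haves: List[Criterion]
    nice_to_haves: List[Criterion] = field(default_factory=list)


def screen(answers: Answers, segments: List[Segment]) -> Optional[str]:
    """Branch a respondent to the first segment whose must-haves ALL pass; None means screened out."""
    for segment in segments:
        if all(check(answers) for check in segment.must_haves):
            return segment.name
    return None


def nice_to_have_score(answers: Answers, segment: Segment) -> int:
    """Count the nice-to-haves a respondent meets (a tiebreaker, never a filter)."""
    return sum(1 for check in segment.nice_to_haves if check(answers))


def not_an_insider(answers: Answers) -> bool:
    # Screens out subject matter experts and developers, who "know too much".
    return not answers.get("works_in_tech_or_auto", False)


# Hypothetical criteria for a "configure your new minivan" study.
segments = [
    Segment(
        name="current minivan owner",
        must_haves=[
            not_an_insider,
            lambda a: a.get("owns_minivan") is True,
            lambda a: a.get("months_until_purchase", 99) <= 12,
        ],
        nice_to_haves=[lambda a: a.get("has_configured_car_online") is True],
    ),
    Segment(
        name="first-time minivan buyer",
        must_haves=[
            not_an_insider,
            lambda a: a.get("owns_minivan") is False,
            lambda a: a.get("months_until_purchase", 99) <= 6,
        ],
    ),
]

print(screen({"owns_minivan": True, "months_until_purchase": 3}, segments))
# current minivan owner
print(screen({"owns_minivan": False, "months_until_purchase": 3,
              "works_in_tech_or_auto": True}, segments))
# None
```

Checking segments in order is a simple stand-in for a platform’s branching logic; if a respondent could fit more than one segment, you would still need to decide which quota they fill first.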

Recruit some extra testers

Recruit 10-20% more testers than your requirements dictate. Why? Because no matter how good your screener is, some respondents will either a) just plain lie when answering the screener, or b) not be good testers (they won’t share enough of their thoughts or follow your script instructions). Or, after running the first couple of sessions, you may discover that something’s wrong with your script.

Let’s say you’re running a qualitative UT with 10 participants. To make sure you get 10 “good completes”, recruit 11 or 12 testers.

If you’re recruiting more participants — for example, 40 for a quantitative test — you should recruit 4-8 extras. Four should be enough if you “test your test” before fully launching it. More on that lesson later.

True, you won’t always get bad testers. But it happens enough that I’m willing to pay 20% more up-front to avoid having to do extra recruiting, or suffer from a late-in-the-analysis-game “data deficit.”
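The arithmetic above is easy to do in your head, but if you plan many studies, a small helper keeps it consistent. This is a hypothetical Python sketch: the function names are illustrative, and the 10% and 20% defaults come from the range suggested above. Extras are rounded up, so a fraction of a tester becomes a whole one.

```python
from typing import Tuple


def extra_recruits(good_completes: int, overage_pct: int) -> int:
    """Extra testers to recruit for a given overage percentage, rounded up to whole people."""
    if good_completes < 0 or not 0 <= overage_pct <= 100:
        raise ValueError("good_completes must be >= 0 and overage_pct between 0 and 100")
    # Integer ceiling division keeps the rounding exact, with no floating-point surprises.
    return (good_completes * overage_pct + 99) // 100


def recruitment_range(good_completes: int, low_pct: int = 10, high_pct: int = 20) -> Tuple[int, int]:
    """Total testers to recruit at the low and high ends of the overage range."""
    return (
        good_completes + extra_recruits(good_completes, low_pct),
        good_completes + extra_recruits(good_completes, high_pct),
    )


print(recruitment_range(10))  # (11, 12)
print(recruitment_range(40))  # (44, 48)
```

Rounding up is deliberate: 10% extra on 15 completes is 1.5 testers, and half a tester can’t complete a session, so the helper asks for 2.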

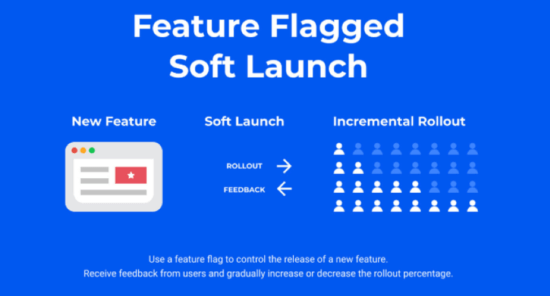

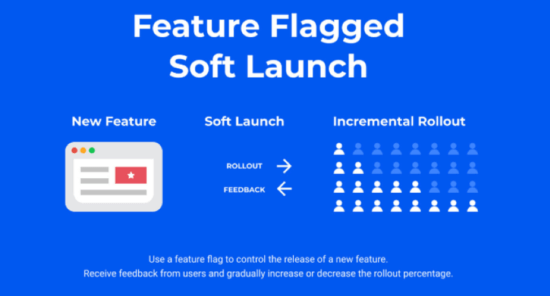

3. Run one to two pilot tests before fully launching your test

Think about it: “quality assurance” (QA) and “beta” testing are a standard part of the website and software development process. So why shouldn’t you also QA your user tests? It’s a proven way to mitigate test deployment risks.

Before you run your first “pilot” or “soft launch” test, make sure you’ve done your due diligence by:

- Reviewing your script on your test platform (if you’re using one) to make sure the question logic and branching are solid.

- Running through the test script in “tester view,” and fixing any issues that arise.

- Double-checking that the script questions and tasks map back to your test objectives.
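Your platform’s tester view remains the main check here, but if you keep your script outline in a structured form, the ordering and objective-mapping checks can be partly automated. Below is a minimal Python sketch, assuming a hypothetical outline in which you tag each task with the objective it serves and rate each scenario’s difficulty yourself; `Task`, `Scenario`, and `lint_script` are illustrative names, not features of any testing platform. It flags scenarios that get easier instead of harder, tasks that map to no stated objective, and objectives that no task covers.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Task:
    prompt: str
    objective: str  # the test objective this task is meant to serve


@dataclass
class Scenario:
    title: str
    difficulty: int  # hypothetical rating you assign, e.g. 1 (easy) to 5 (hard)
    tasks: List[Task]


def lint_script(objectives: List[str], scenarios: List[Scenario]) -> List[str]:
    """Return problems found in the script outline; an empty list means none were found."""
    problems: List[str] = []

    # Scenarios should progress from lower to higher difficulty.
    for prev, curr in zip(scenarios, scenarios[1:]):
        if curr.difficulty < prev.difficulty:
            problems.append(f"'{curr.title}' is easier than '{prev.title}', which comes before it")

    # Every task should map back to a stated objective...
    covered = set()
    for scenario in scenarios:
        for task in scenario.tasks:
            if task.objective in objectives:
                covered.add(task.objective)
            else:
                problems.append(f"Task '{task.prompt}' maps to no stated objective")

    # ...and every objective should have at least one task.
    for objective in objectives:
        if objective not in covered:
            problems.append(f"Objective '{objective}' has no task")

    return problems


objectives = ["compare trim levels", "configure options"]
script = [
    Scenario("Compare trims", 1, [Task("Find the lowest-priced trim", "compare trim levels")]),
    Scenario("Build your minivan", 3, [Task("Add a roof rack", "configure options")]),
]
print(lint_script(objectives, script))             # []
print(len(lint_script(objectives, script[::-1])))  # 1
```

A check like this can’t judge wording or flow, so it complements, rather than replaces, running through the script yourself.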

Once you’ve done this, “soft launch” your test. That is, launch it with the first participant only. Note issues that arise — with the script, screening criteria, or otherwise — and tweak things accordingly. When you’re sure the task wording’s clear and the question flow’s solid, fully launch the test to all testers.

A more agile (though somewhat riskier) alternative is to:

- Fully launch the test

- Monitor the first couple sessions for issues

- Quickly fix the test setup if issues arise

With this approach, if everything looks good, you don’t have to spend time relaunching the test. But you’ll need to keep a close eye on these tests, especially if they’re unmoderated, because with testers quickly “accepting” new tests, a few sessions may complete in less than an hour.

So either “launch one and pause” or “launch and monitor” based on your risk tolerance and testing workload.

Apply these learnings and avoid anguish

There’s no teacher like experience, or like the emotional anguish that comes from making public mistakes. The good news is that by heeding this advice, you won’t have to make the same ones I’ve made.

Most importantly, you’ll spend more of your time collecting and sharing great user insights and building your “UX research rockstar” reputation.