4 techniques to reduce duplicate site content

Although we still hear a lot of recommendations on how to optimise pages and the importance of links from other sites to improve rankings, we don't hear so many recommendations about reducing the amount of duplicate content. This seems to be a neglected area of SEO advice, so this refresher highlights some of the techniques to avoid duplicate content.

What is duplicate content?

Duplicate content is where different pages on a site are interpreted as similar by Google. As a result, pages can be discarded or down-weighted in Google's index so that they won't rank. In its webmaster tools guidance, Google says:

Duplicate content generally refers to substantive blocks of content within or across domains that either completely match other content or are appreciably similar. Mostly, this is not deceptive in origin. Examples of non-malicious duplicate content could include: discussion forums that can generate both regular and stripped-down pages targeted at mobile devices; store items shown or linked via multiple distinct URLs and printer-only versions of web pages.

We would add that, more importantly for site owners, category, product or service pages can be treated as duplicates because they are too similar to one another. You may be able to see this in your analytics if some product pages attract no natural search traffic.

To reduce this problem, here are my recommendations for dealing with duplicate content, which you could check with your agency or in-house team. Numbers 1, 3 and 4 are typically the most relevant.

1. Show Google pages have distinct content

This is straightforward, but you have to make sure the page editors creating the content know how to do it. It's normal good practice for on-page optimisation. You should set unique titles, meta descriptions, headings and body copy for each page you want to rank. Each page should have a focus subject or main keyword you want to target. Briefing your copywriter to stay focused on that subject is key to ensuring your pages are unique.

To check that your pages don't have duplicate titles or descriptions, look at the HTML improvements section in Google Webmaster Tools, which flags pages with identical titles or descriptions for you.
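If you want to run a similar check yourself before Google does, the idea can be sketched in a few lines of Python. This is only an illustration under assumptions: the `find_duplicates` function, the `HeadParser` class and the shape of the `pages` input (a dictionary of URL to HTML source that you have already fetched) are hypothetical names of ours, not part of any Google tool.

```python
from collections import defaultdict
from html.parser import HTMLParser


class HeadParser(HTMLParser):
    """Collects the <title> text and the meta description of one page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def find_duplicates(pages, field):
    """Group URLs that share the same value for `field`.

    `pages` maps URL -> HTML source (hypothetical input format).
    `field` is either "title" or "description".
    Returns {value: [urls]} for values used by more than one URL.
    """
    groups = defaultdict(list)
    for url, html in pages.items():
        parser = HeadParser()
        parser.feed(html)
        value = getattr(parser, field).strip()
        groups[value].append(url)
    return {value: urls for value, urls in groups.items() if len(urls) > 1}
```

Calling `find_duplicates(pages, "title")` and then `find_duplicates(pages, "description")` gives you two lists of pages to hand to your copywriter.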

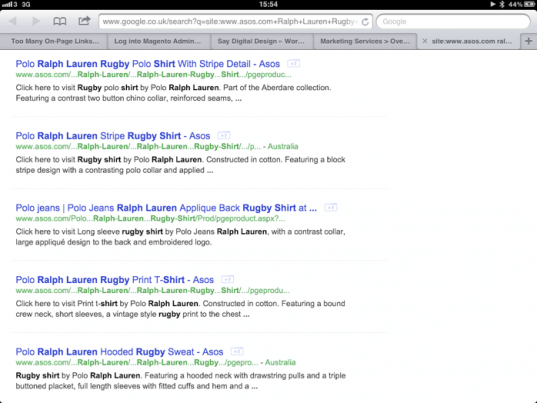

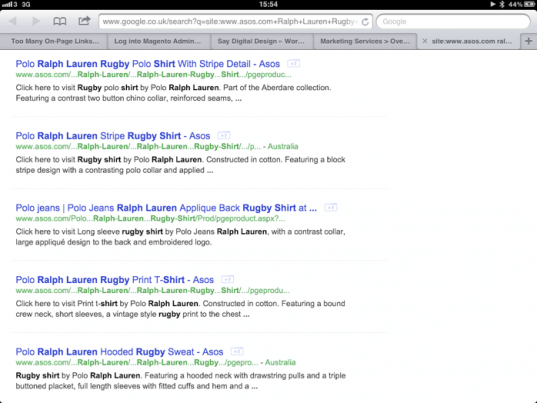

Here's an example of where ASOS are doing a good job of keeping page titles and descriptions unique:

While the products are very similar, ASOS has kept the page titles and descriptions different. To arrive at these results I searched Asos.com for "Ralph Lauren Rugby Top".

2. Robots.txt – exclude crawlers from duplicate content

An established tool in the SEO toolkit, robots.txt is a plain text file located in the root of your website. With it, site owners can instruct specific search engine crawlers, or all of them, to stay away from specific parts of the site. The example below would stop Googlebot from crawling any files within the print-versions directory.

User-agent: Googlebot

Disallow: /print-versions/
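Before publishing a rule like this, it is worth checking that it blocks what you expect and nothing more. Below is a minimal sketch using Python's standard `urllib.robotparser` module; the `is_allowed` helper and the example URLs are our own assumptions for illustration. Note that it tests crawl permission only, which is what robots.txt controls.

```python
from urllib.robotparser import RobotFileParser

# The same two rules as in the example above.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /print-versions/
"""


def is_allowed(user_agent, url, robots_txt=ROBOTS_TXT):
    """Return True if `user_agent` may crawl `url` under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent, url in [
        ("Googlebot", "http://www.example.com/print-versions/page.html"),
        ("Googlebot", "http://www.example.com/products/page.html"),
        ("Bingbot", "http://www.example.com/print-versions/page.html"),
    ]:
        print(agent, url, is_allowed(agent, url))
```

Because the rule names Googlebot only, other crawlers are still free to fetch the print versions; use `User-agent: *` if you want the rule to apply to all of them.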

Alternatively, you can use the robots meta tag to specify whether a particular page should be indexed by search engines. An example of what you would include in the head section of the page is:

<meta name="robots" content="noindex,nofollow" >

You can read more about utilising the meta tag here if you need to.

3. 301 permanent redirects

301 redirects are a common way of making sure visitors are directed to the right page. For example, if a page is taken down because a campaign has finished, you can redirect people to your homepage so they do not see an error page and bounce. A 301 also tells search engines that the page has permanently moved to a new location. This is useful when you need to retire older or duplicate versions of web pages; for example, sites sometimes have several near-identical versions of the homepage. A 301 ensures Google finds the new page and passes on as much of the old page's ranking value as it considers relevant.

Another common use of a 301 redirect is to redirect non "www" traffic as per the example below:

http://example.com > http://www.example.com
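In practice this redirect is usually set up in your web server or hosting configuration, but the logic behind it is simple. The sketch below shows it in Python; the `redirect_for` function and the two hostname constants are hypothetical names chosen for illustration, and the example assumes `www.example.com` is the version you want indexed.

```python
from urllib.parse import urlsplit, urlunsplit

BARE_HOST = "example.com"           # assumption: the non-www hostname
CANONICAL_HOST = "www.example.com"  # assumption: the preferred hostname


def redirect_for(url):
    """Return (301, target_url) if `url` uses the bare host, else None.

    Path, query string and fragment are preserved so that every
    non-www URL maps to its exact www equivalent.
    """
    parts = urlsplit(url)
    if parts.netloc.lower() != BARE_HOST:
        return None
    target = urlunsplit(
        (parts.scheme, CANONICAL_HOST, parts.path, parts.query, parts.fragment)
    )
    return 301, target
```

The important design choice is to redirect each URL to its own equivalent rather than sending everything to the homepage, so that deep pages keep their own ranking value.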

4. Use rel=“canonical” or exclude parameters in Google Webmaster Tools

The canonical tag is becoming a widely used method for telling search engines about duplicate pages and, more specifically, which URL is the primary one. I wrote specifically about implementing canonical tags on ecommerce sites previously.

The tag should look like this in the HEAD section of your HTML page, specifying the preferred version among the duplicates.

<link rel="canonical" href="http://www.example.com/product-1.html" />

The tag tells search engines that the page is a duplicate and also where the primary page is located.
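To confirm that your duplicate pages really do point at the primary URL, you can read the tag back out of the page source. The following is a rough sketch using Python's standard `html.parser`; the `canonical_url` function and `CanonicalParser` class are our own illustrative names, and the code assumes you already have the page's HTML as a string.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Remembers the href of the first <link rel="canonical"> it sees."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel_values = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attrs.get("href")


def canonical_url(html):
    """Return the canonical URL declared in `html`, or None if absent."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical
```

Running this over each duplicate and comparing the result with the URL you expect is a quick way to spot pages where the tag is missing or points somewhere else.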

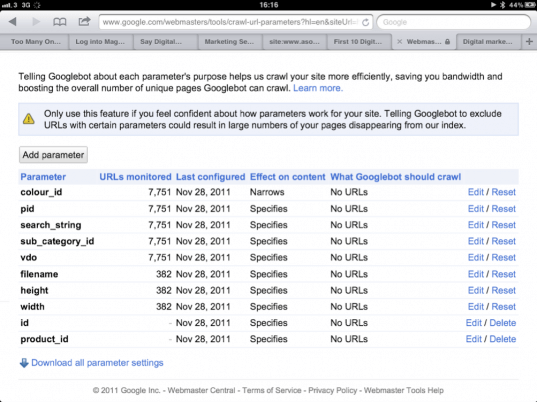

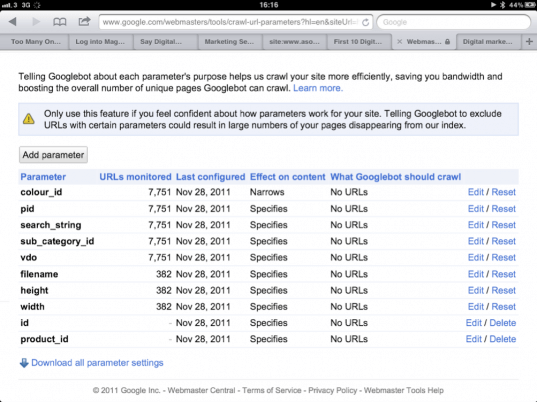

A more recent feature in Google Webmaster Tools is the ability to tell Google which URL parameters on your website lead to similar page content. You can then specify whether Google should index those URLs or not; see the example below:

This solution is often particularly useful on ecommerce sites, where duplicate content can occur when the same content is available via different URLs, for example through a faceted search for selecting products, or through session IDs or affiliate parameters, like this:

http://www.example.com/products/women/dresses/green.htm

http://www.example.com/products/women?category=dresses&color=green

http://example.com/shop/index.php?product_id=32&highlight=green+dress&cat_id=1&sessionid=123&affid=431

In these cases you would specify "category", "product_id", "highlight", "sessionid" and "affid" as parameters.
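To get a feel for how many of your URLs collapse into the same page once such parameters are ignored, you can normalise them yourself. The sketch below is an illustration only: the `normalise` function is a hypothetical helper, and it assumes that `sessionid`, `affid` and `highlight` do not change the page content, whereas `category` and `product_id` do and are therefore kept. Check that assumption against your own site before relying on it.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters track or decorate the page but do not
# change which content is shown.
IGNORED_PARAMS = {"sessionid", "affid", "highlight"}


def normalise(url, ignored=IGNORED_PARAMS):
    """Return `url` with the ignored query parameters removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in ignored
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment)
    )
```

Two URLs that normalise to the same string are candidates for a canonical tag or a parameter setting in Webmaster Tools.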

From this post you can see there are quite a few ways duplicate content can occur and a range of tools to help manage it. So next time you're speaking to whoever is responsible for your SEO, ask about the steps they take to minimise duplicate content.