5 DIY options for identifying duplicate content to improve SEO

SEOs talk a lot about why duplicate content matters and what to do about it; marketers tend not to.

I think an understanding of duplicate content is important to all marketers who manage SEO, so they can ask the right questions of their search specialists or agencies, or fix the problem themselves. To help bridge the gap, this is the first of two posts explaining what duplicate content is, why it matters and what to do about it.

What is duplicate content?

Duplicate content is where a search engine identifies different pages as similar. As a result it either doesn't include them in the index or down-weights their importance. So you may have pages that you think are unique and should attract visits, but the search engines don't see it that way, and pages identified as duplicate content won't attract any visits. That's why they matter. The reason for this is that search engines like Google can crawl over one trillion URLs, but they only want to store and rank the more relevant ones.

Duplicate content on your own site is usually the biggest SEO problem, but it can also be a problem when other websites copy your content for their own gain (this can be innocent, and most would not realise the potential impact).

In this post I'll focus on identifying duplicate content on your own website. If you are concerned about your content being copied by others, you can use services like Copyscape to review that.

How to review and manage duplicate content

Google primarily identifies duplicate content through pages which have identical or similar titles, descriptions, headings and copy, so to start with you have to audit these. You can use any of the following methods to help find potential duplicate content issues. In reality, it will likely take several of them to do an effective job.
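To make "identical or similar" a bit more concrete, here is a minimal Python sketch that scores how alike page titles are. It is only an illustration: the page data, the `similar_pairs` helper and the 0.9 threshold are all assumptions of mine, and it says nothing about how Google actually measures similarity.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalise(text):
    """Lower-case and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def similar_pairs(titles, threshold=0.9):
    """Return (url_a, url_b, score) for every pair of pages whose titles
    are at least `threshold` similar (1.0 means identical)."""
    pairs = []
    for (url_a, title_a), (url_b, title_b) in combinations(sorted(titles.items()), 2):
        score = SequenceMatcher(None, normalise(title_a), normalise(title_b)).ratio()
        if score >= threshold:
            pairs.append((url_a, url_b, round(score, 2)))
    return pairs


# Hypothetical sample data: URL path -> page title
pages = {
    "/tops/rugby-top-red": "Ralph Lauren Rugby Top | Example Shop",
    "/tops/rugby-top-blue": "Ralph Lauren Rugby Top | Example Shop",
    "/about": "About us | Example Shop",
}

for url_a, url_b, score in similar_pairs(pages):
    print(f"{url_a} and {url_b} have similar titles (score {score})")
```

The same function could be run over meta descriptions or headings. Comparing every pair is fine for a few hundred pages but becomes slow on large sites.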

Option 1. Use Google Webmaster Tools

This is the best starting point. If you don't have a budget for SEO, site marketers can do this on their own.

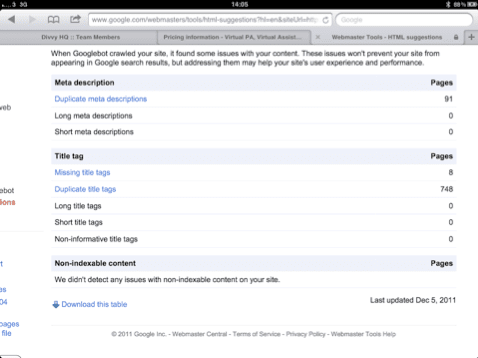

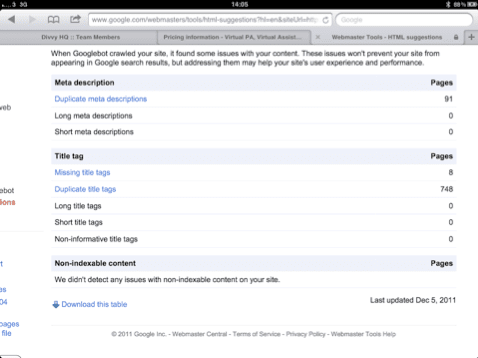

Once your site is verified within Google Webmaster Tools you can navigate down the left-hand menu to Diagnostics and then into HTML suggestions, where you will see information ranging from duplicate title and description tags through to pages with long titles or descriptions.

If you have duplicate page titles or meta descriptions, as in this example, you should review them to see whether each page should be targeted uniquely.

Option 2. Utilise the SEOmoz toolkit

Use the SEOmoz tool to find duplicate content (you can take out a subscription one month at a time for one-off tasks like this). Within the crawl diagnostics panel, duplicate content will come up as an error in red. From here you can drill down into the issues and prioritise your work. The full crawl of your site can take up to 7 days (depending on size, etc.), so please bear that in mind!

Option 3. Utilise the Google "site:" command

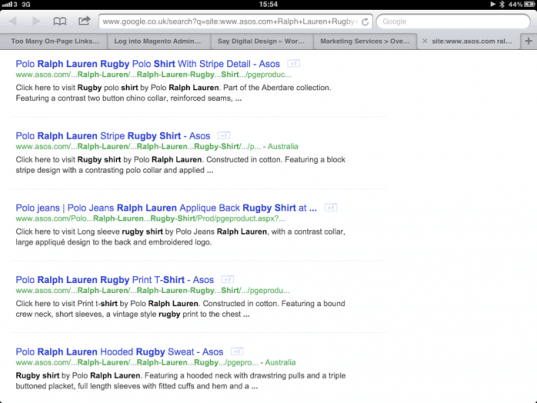

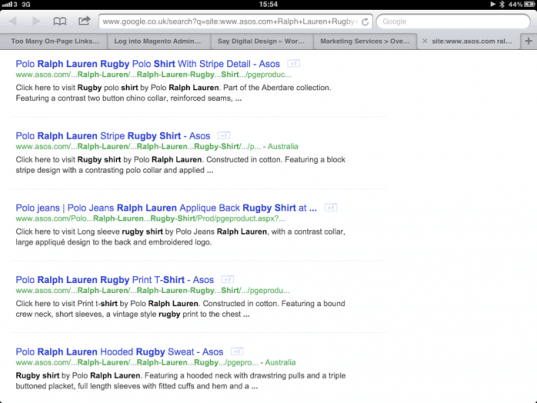

Select sample generic or product keyphrases and search for them, in quotes, within your site using the Google site: command. To create the screenshot below I typed the following into Google:

site:www.asos.com Ralph Lauren Rugby Top

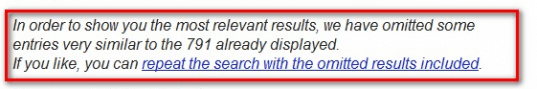

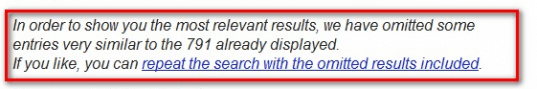

From here you can identify whether titles and descriptions are the same. You may also receive a message like the one below, which means that Google has identified the pages as duplicates and has downgraded them to its secondary index.

Option 4. Screaming Frog SEO Spider / Xenu

I have mentioned these two tools a number of times in previous blog posts. They are famed for their ability to report broken links on your website, but they also return information which, once in Excel, you can use to identify duplicate URLs, titles and descriptions. Just by sorting on a column and using the formula "=A1=A2" (swap column and row as required) you can easily see where there are potential issues. If the Excel formula returns TRUE, the two adjacent cells are identical and the rows are therefore duplicates.
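If you would rather not do this in Excel, the same sort-and-compare check can be sketched in a few lines of Python. This is a rough illustration, not a feature of either tool: the column names `url`, `title` and `description` are hypothetical, so rename them to match whatever headings your crawl export actually uses.

```python
import csv
import io
from collections import defaultdict


def find_duplicates(rows, column):
    """Group URLs by the value in `column` and keep only values shared by
    two or more URLs. Comparison ignores case and surrounding spaces."""
    groups = defaultdict(list)
    for row in rows:
        value = row[column].strip().lower()
        if value:  # skip blank cells so empty titles are not grouped together
            groups[value].append(row["url"])
    return {value: urls for value, urls in groups.items() if len(urls) > 1}


# Hypothetical crawl export. With a real file you would use
# open("crawl.csv", newline="") instead of io.StringIO.
sample = io.StringIO(
    "url,title,description\n"
    "/a,Rugby Top,Classic rugby top\n"
    "/b,Rugby Top,Classic rugby top in blue\n"
    "/c,Polo Shirt,Classic polo\n"
)
rows = list(csv.DictReader(sample))

duplicate_titles = find_duplicates(rows, "title")
duplicate_descriptions = find_duplicates(rows, "description")

for title, urls in duplicate_titles.items():
    print(f"Title '{title}' is used by: {', '.join(urls)}")
```

Unlike the "=A1=A2" formula, grouping does not need the data sorted first, and it lists every URL sharing a value rather than just adjacent pairs.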

Option 5. Google Analytics Data

Another option is to review landing pages in web analytics that aren't receiving any natural search visitors, or whose natural search traffic is low compared to their other traffic (sometimes called SEO inactive pages). To do this, create an Excel file listing all your pages, then apply an advanced segment to your reports in Google Analytics so that they only include traffic from organic search. Compare the two lists to identify the pages which are not receiving organic traffic.
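The comparison itself is just "pages in the full list that are missing from the organic list". A minimal Python sketch, assuming you have already exported both lists as URL paths (the sample paths and the `seo_inactive_pages` helper are hypothetical):

```python
def normalise(path):
    """Trim whitespace and any trailing slash so '/tops/' and '/tops' match."""
    return path.strip().rstrip("/") or "/"


def seo_inactive_pages(all_pages, organic_landing_pages):
    """Return pages from the full list that never appear as a landing page
    in the organic-search segment."""
    active = {normalise(p) for p in organic_landing_pages}
    candidates = {normalise(p) for p in all_pages}
    return sorted(candidates - active)


# Hypothetical data: every page on the site, and the landing pages
# reported under the organic-search segment.
all_pages = ["/", "/tops/", "/tops/rugby-top", "/about"]
organic_landing_pages = ["/", "/tops"]

inactive = seo_inactive_pages(all_pages, organic_landing_pages)
for page in inactive:
    print(f"No organic landing visits: {page}")
```

This only finds pages with no organic visits at all. Spotting pages whose organic share is merely low would need the visit counts as well.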

Once you have identified duplicate content which should be attracting visitors but isn't, the next task is to work out why this is happening and try to prevent it. That's the subject of my next post.