A new report shows over half of web traffic comes from bots

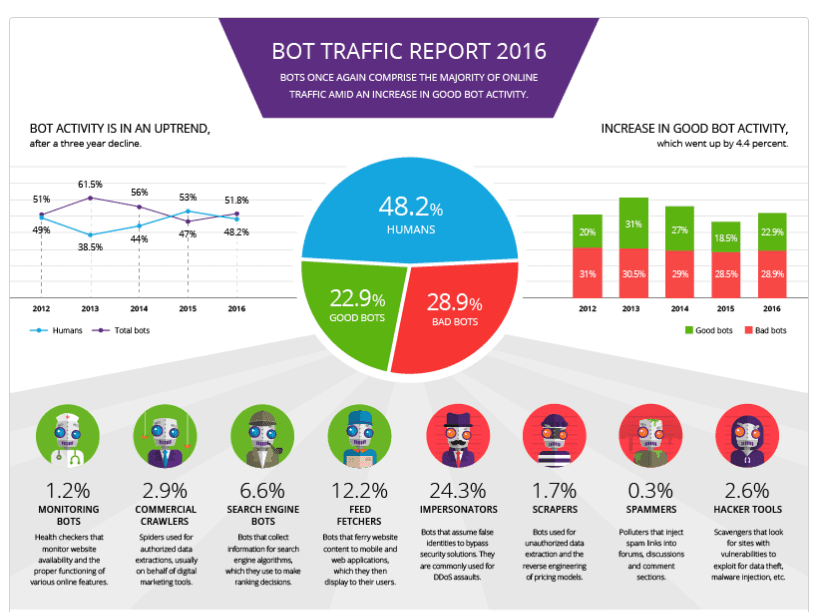

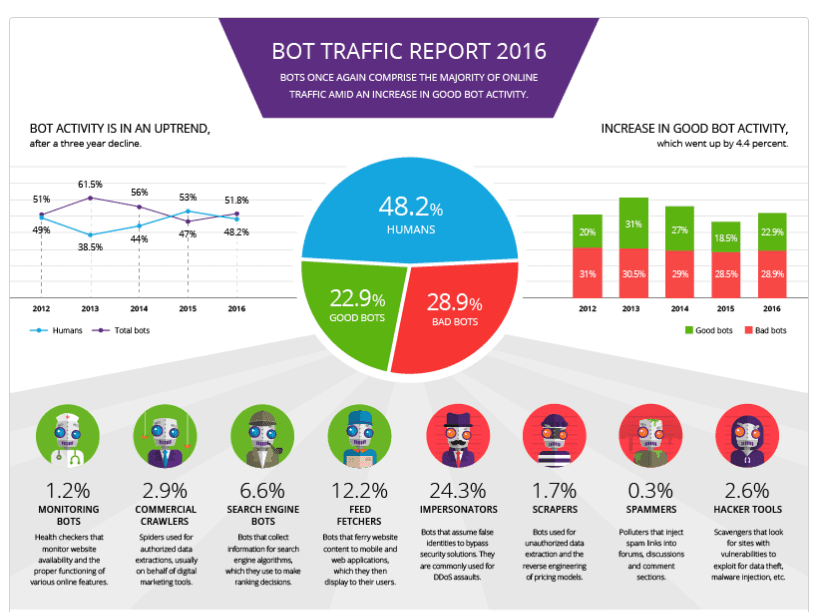

Every year Incapsula release their bot traffic report, and every year I can't help but be shocked by quite how much online traffic is made up of bots. This year was particularly shocking because human traffic fell below the 50% mark, meaning that the majority of web traffic now comes from bots. And before you go thinking, 'that must just be wrong, how could there be that many bots?', the report analyzed 6.7 billion web visits across 100,000 randomly selected domains. With a sample size almost as large as the total population of planet Earth, it's likely that this finding is not far off the mark.

Bots aren't necessarily bad; many perform useful functions without which the internet would not work properly. However, the report also analyzed the nature of the bots, dividing them into 'good bots', which provide useful functions, and 'bad bots', which damage users' experience and hurt advertisers or site owners.

Worryingly, it found that bad bots outnumber the good ones and account for a troubling 29% of all web traffic. Bad bots deliberately attempting to impersonate real users are often responsible for DDoS attacks. You can see a breakdown of the bots in the graphic below:

This means almost one in every three web visits was by an attack bot looking to negatively impact a site. More than 94% of the domains analyzed had suffered some form of bot attack in the last 90 days. Smart Insights certainly counts itself among that number. You may recently have noticed we've had to take measures to stop a distributed denial of service attack that we have been under for a few days. These measures unfortunately increase site load times, but without them our site would go down.

Just because 52% of traffic is from bots doesn't mean your analytics are all out by a factor of 2, though. Whilst bots will show up in your server logs, most good bots won't show up in Google Analytics, because Google Analytics knows not to count them. Search engine bots, for example, will not be counted in analytics, and nor, usually, will feed fetchers. However, impersonator bots are deliberately trying to imitate a user, and thus will often wrongly inflate view totals in analytics. This leads to a key problem that bots pose for marketers: ad fraud.

The problem of ad fraud

Aside from the problem of DDoS attacks, there is the issue of ad fraud which is particularly pertinent to digital marketers at the moment. P&G announced last week that it would be withdrawing all funding from any digital ad providers that did not sign up to its standards on transparency and ad fraud. If a publisher is charging an advertiser a certain amount per 1,000 impressions, and a large chunk of these 1,000 impressions are coming from bots, is the publisher guilty of ad fraud? They certainly are if they are deliberately using bots to inflate the impressions, but if they are unaware that bots were responsible for the impressions, does that absolve them?
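To make the stakes concrete, here is a rough sketch of the arithmetic behind that question. It assumes an advertiser pays a flat rate per 1,000 impressions and that a known fraction of those impressions come from bots. The function names, the £10 rate and the 29% bot share are purely illustrative; the 29% simply borrows the report's bad-bot share of all web traffic, which is not a measured figure for ad impressions.

```python
def effective_cpm(cpm: float, bot_share: float) -> float:
    """Cost per 1,000 impressions that were actually seen by humans.

    cpm       -- price paid per 1,000 impressions (any currency)
    bot_share -- assumed fraction of impressions generated by bots, 0 <= x < 1
    """
    if not 0 <= bot_share < 1:
        raise ValueError("bot_share must be at least 0 and less than 1")
    # Only (1 - bot_share) of the paid impressions reach a person,
    # so the same spend is spread over fewer real views.
    return cpm / (1 - bot_share)


def wasted_spend(budget: float, bot_share: float) -> float:
    """Portion of a budget that paid for impressions served to bots."""
    if not 0 <= bot_share < 1:
        raise ValueError("bot_share must be at least 0 and less than 1")
    return budget * bot_share


if __name__ == "__main__":
    # Illustrative numbers only, not taken from any real campaign.
    print(round(effective_cpm(10.0, 0.29), 2))
    print(round(wasted_spend(1000.0, 0.29), 2))
```

On these assumptions, a £10 rate works out at roughly £14 per 1,000 human impressions, and close to a third of the budget pays for views no person ever saw. The real figure for any given publisher could be far higher or lower, which is exactly why advertisers are asking for transparency.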

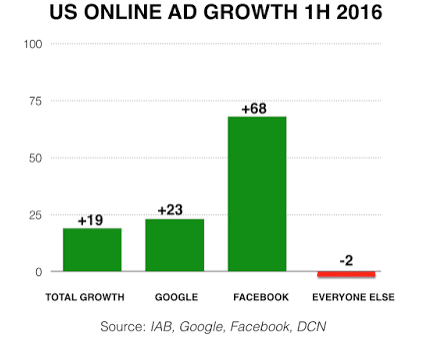

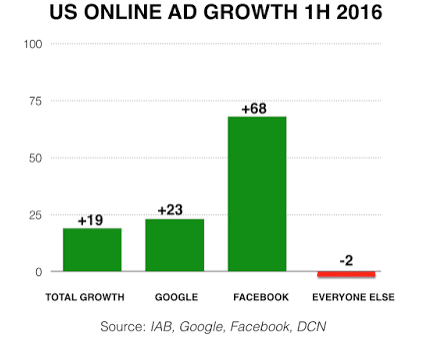

Another problem is that digital advertising is dominated by two huge 'walled gardens', Facebook and Google, which now account for the vast majority of ad spend.

Whilst it would be relatively easy for large advertisers such as P&G (the world's largest advertiser) to get small online ad providers to open up their systems and agree to transparency, it will be far harder to make Google and Facebook play by the same rules. Both have every reason to want to keep their data to themselves and make brands use their metrics, rather than standardizing metrics to give greater transparency. Both Google and Facebook are considerably larger than P&G, and are far more able than smaller firms to ignore its demands.

Marketers need to realise that we are currently at a critical juncture in online advertising. Will a major crackdown on bots and ad fraud, via a coordinated effort by advertisers and publishers, lead to better-trusted metrics and greater confidence in online advertising? Or will the sheer number of bots cause major advertisers to pull out of online advertising, costing publishers dearly?